Privacy and Control

In January, Facebook Chief Executive Mark Zuckerberg declared the age of privacy to be over. A month earlier, Google Chief Eric Schmidt expressed a similar sentiment. Add Scott McNealy's and Larry Ellison's comments from a few years earlier, and you've got a whole lot of tech CEOs proclaiming the death of privacy -- especially when it comes to young people.

It's just not true. People, including the younger generation, still care about privacy. Yes, they're far more public on the Internet than their parents: writing personal details on Facebook, posting embarrassing photos on Flickr and having intimate conversations on Twitter. But they take steps to protect their privacy and vociferously complain when they feel it violated. They're not technically sophisticated about privacy and make mistakes all the time, but that's mostly the fault of companies and Web sites that try to manipulate them for financial gain.

To the older generation, privacy is about secrecy. And, as the Supreme Court said, once something is no longer secret, it's no longer private. But that's not how privacy works, and it's not how the younger generation thinks about it. Privacy is about control. When your health records are sold to a pharmaceutical company without your permission; when a social-networking site changes your privacy settings to make what used to be visible only to your friends visible to everyone; when the NSA eavesdrops on everyone's e-mail conversations -- your loss of control over that information is the issue. We may not mind sharing our personal lives and thoughts, but we want to control how, where and with whom. A privacy failure is a control failure.

People's relationship with privacy is socially complicated. Salience matters: People are more likely to protect their privacy if they're thinking about it, and less likely to if they're thinking about something else. Social-networking sites know this, constantly reminding people about how much fun it is to share photos and comments and conversations while downplaying the privacy risks. Some sites go even further, deliberately hiding information about how little control -- and privacy -- users have over their data. We all give up our privacy when we're not thinking about it.

Group behavior matters; we're more likely to expose personal information when our peers are doing it. We object more to losing privacy than we value its return once it's gone. Even if we don't have control over our data, an illusion of control reassures us. And we are poor judges of risk. All sorts of academic research backs up these findings.

Here's the problem: The very companies whose CEOs eulogize privacy make their money by controlling vast amounts of their users' information. Whether through targeted advertising, cross-selling or simply convincing their users to spend more time on their site and sign up their friends, more information shared in more ways, more publicly means more profits. This means these companies are motivated to continually ratchet down the privacy of their services, while at the same time pronouncing privacy erosions as inevitable and giving users the illusion of control.

You can see these forces in play with Google's launch of Buzz. Buzz is a Twitter-like chatting service, and when Google launched it in February, the defaults were set so people would follow the people they corresponded with frequently in Gmail, with the list publicly available. Yes, users could change these options, but -- and Google knew this -- changing options is hard and most people accept the defaults, especially when they're trying out something new. People were upset that their previously private e-mail contacts list was suddenly public. A Federal Trade Commission commissioner even threatened penalties. And though Google changed its defaults, resentment remained.

Facebook tried a similar control grab when it changed people's default privacy settings last December to make them more public. While users could, in theory, keep their previous settings, it took an effort. Many people just wanted to chat with their friends and clicked through the new defaults without realizing it.

Facebook has a history of this sort of thing. In 2006 it introduced News Feeds, which changed the way people viewed information about their friends. There was no true privacy change in that users could not see more information than before; the change was in control -- or arguably, just in the illusion of control. Still, there was a large uproar. And Facebook is doing it again; last month, the company announced new privacy changes that will make it easier for it to collect location data on users and sell that data to third parties.

With all this privacy erosion, those CEOs may actually be right -- but only because they're working to kill privacy. On the Internet, our privacy options are limited to the options those companies give us and how easy they are to find. We have Gmail and Facebook accounts because that's where we socialize these days, and it's hard -- especially for the younger generation -- to opt out. As long as privacy isn't salient, and as long as these companies are allowed to forcibly change social norms by limiting options, people will increasingly get used to less and less privacy. There's no malice on anyone's part here; it's just market forces in action. If we believe privacy is a social good, something necessary for democracy, liberty and human dignity, then we can't rely on market forces to maintain it. Broad legislation protecting personal privacy by giving people control over their personal data is the only solution.

This essay originally appeared on Forbes.com.

http://www.forbes.com/2010/04/05/google-facebook-twitter-technology-security-10-privacy.html or http://tinyurl.com/yb3qkj7

skip to main |

skip to sidebar

Bill & Annie

Art Hall & Rusty

NUFF SAID.......

OOHRAH

ONCE A MARINE,ALWAYS A MARINE

GIVING BACK

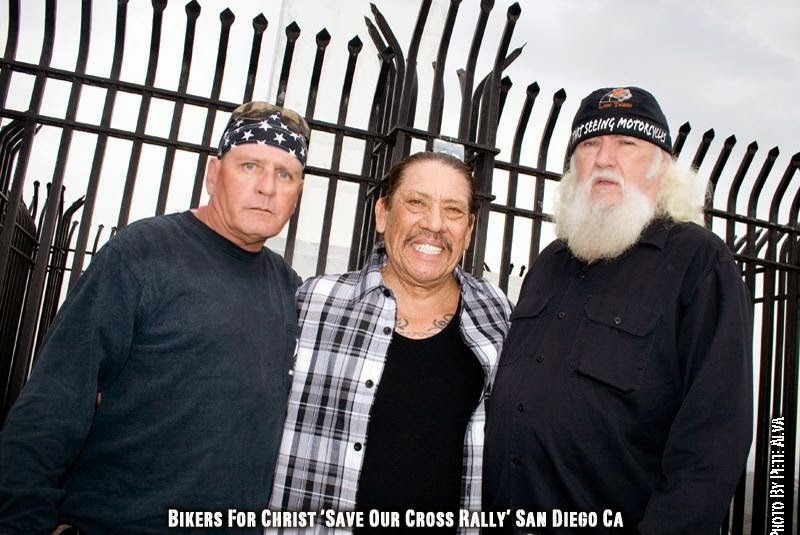

MOUNT SOLEDAD

BIKINI BIKE WASH AT SWEETWATER

FRIENDS

BILL,WILLIE G, PHILIP

GOOD FRIENDS

hanging out

brothers

GOOD FRIENDS

Good Friends

Hanging Out

Bill & Annie

Art Hall & Rusty

Art Hall & Rusty

NUFF SAID.......

NUFF SAID......

Mount Soledad

BALBOA NAVAL HOSPITAL

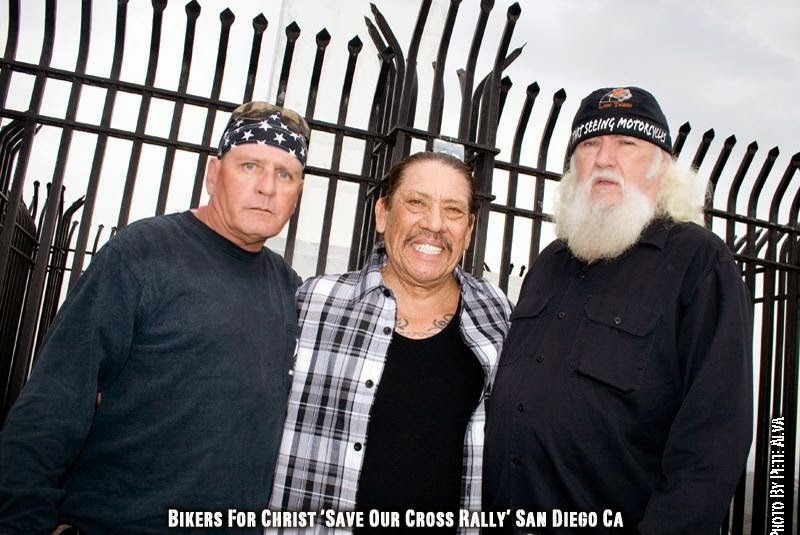

RUSTY DANNY

ANNIE KO PHILIP

PHILIP & ANNIE

OUT & ABOUT

OOHRAH...

OOHRAH

ONCE A MARINE,ALWAYS A MARINE

ONCE A MARINE,ALWAYS A MARINE

American Soldier Network GIVING BACK

GIVING BACK

CATHY & BILL

PHILIP & DANNY & BILL

MOUNT SOLEDAD

bills today

EMILIO & PHILIP

WATER & POWER

WATER & POWER

bootride2013

BIKINI BIKE WASH AT SWEETWATER

ILLUSION OPEN HOUSE

FRIENDS

GOOD FRIENDS

BILL,WILLIE G, PHILIP

GOOD FRIENDS

GOOD FRIENDS

Friends

- http://www.ehlinelaw.com/losangeles-motorcycleaccidentattorneys/

- Scotty westcoast-tbars.com

- Ashby C. Sorensen

- americansoldiernetwork.org

- blogtalkradio.com/hermis-live

- davidlabrava.com

- emiliorivera.com/

- http://kandymankustompaint.com

- http://pipelinept.com/

- http://womenmotorcyclist.com

- http://www.ehlinelaw.com

- https://ammo.com/

- SAN DIEGO CUSTOMS

- www.biggshd.com

- www.bighousecrew.net

- www.bikersinformationguide.com

- www.boltofca.org

- www.boltusa.org

- www.espinozasleather.com

- www.illusionmotorcycles.com

- www.kennedyscollateral.com

- www.kennedyscustomcycles.com

- www.listerinsurance.com

- www.sweetwaterharley.com

Hanging out

hanging out

Good Friends

brothers

GOOD FRIENDS

EMILIO & SCREWDRIVER

GOOD FRIENDS

Danny Trejo & Screwdriver

Good Friends

Navigation

Welcome to Bikers of America, Know Your Rights!

“THE BIKERS OF AMERICA, THE PHIL and BILL SHOW”,

A HARDCORE BIKER RIGHTS SHOW THAT HITS LIKE A BORED AND STROKED BIG TWIN!

ON LIVE TUESDAYS & THURSDAYS AT 6 PM P.S.T.

9 PM E.S.T.

CATCH LIVE AND ARCHIVED SHOWS

FREE OF CHARGE AT...

BlogTalkRadio.com/BikersOfAmerica.

Two ways to listen on Tuesday & Thursday

1. Call in number - (347) 826-7753 ...

Listen live right from your phone!

2. Stream us live on your computer: http://www.blogtalkradio.com/bikersofamerica.



Bikers of America, Know Your Rights!... Brought to you by Phil and Bill

Philip, a.k.a. Screwdriver, is a proud member of Bikers of Lesser Tolerance and the Left Coast Rep of B.A.D. (Bikers Against Discrimination). Along with Bill, he is a biker rights activist. Bill is also a B.A.D. Rep, as well as the owner of Kennedy's Custom Cycles.